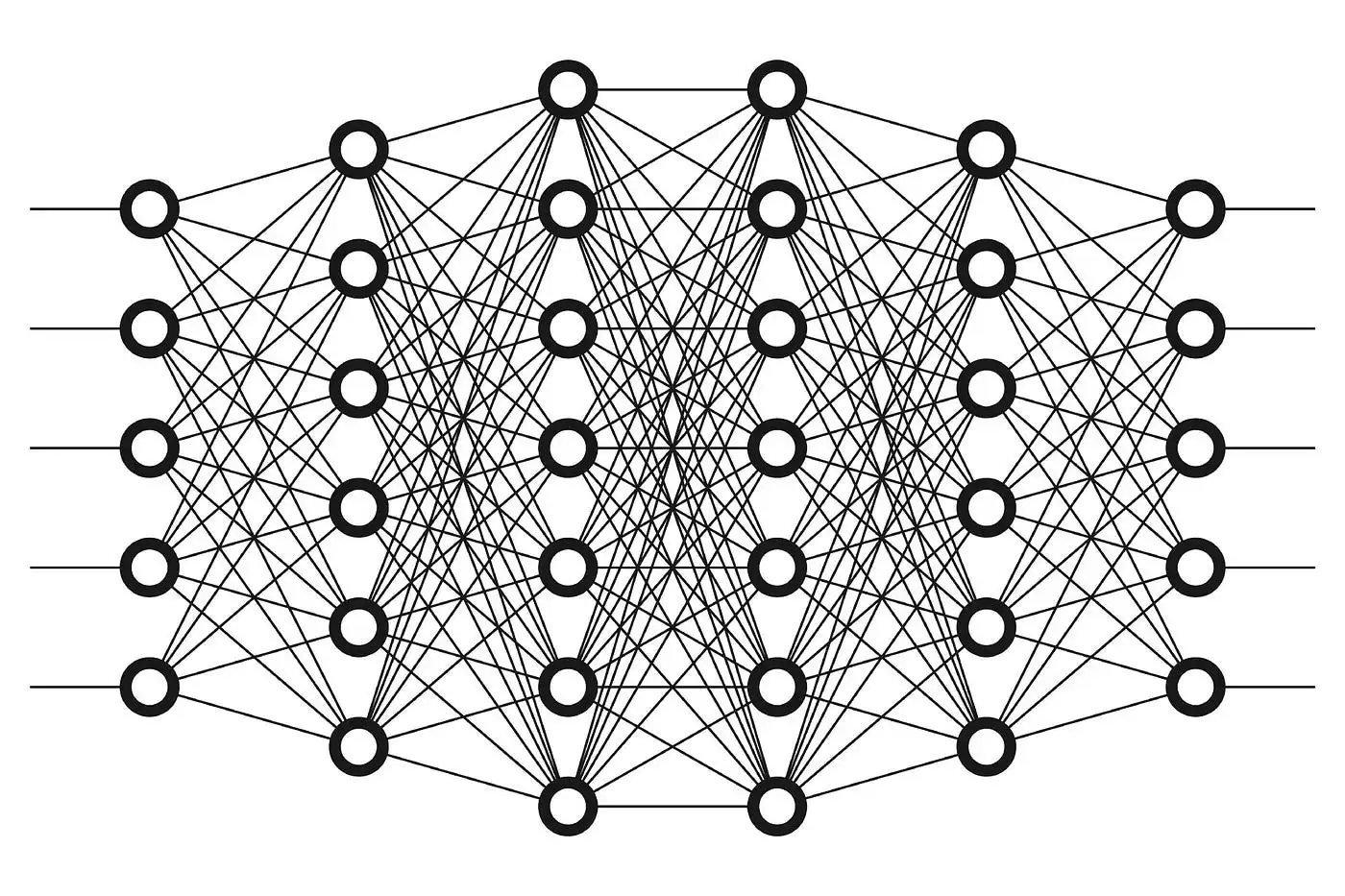

Deep learning has made remarkable strides in various domains, from computer vision to natural language processing. Alongside this rapid progress, however, a serious challenge has emerged: adversarial attacks. These attacks exploit vulnerabilities in deep neural networks, raising concerns about the security and reliability of AI systems. This article surveys common adversarial attacks and the evolving defences that help safeguard the integrity of deep learning models.

Understanding Adversarial Attacks

Adversarial attacks are malicious attempts to manipulate or deceive deep learning models by subtly altering their inputs. These manipulations are often imperceptible to the human eye but can cause dramatic misclassifications or other unintended behaviours in AI systems. Adversarial attacks are commonly grouped into three categories, largely according to how much the attacker knows about the target model:

White-box attacks: Attackers have full knowledge of the model, including its architecture and parameters and sometimes its training data. They use this access, typically through the model's gradients, to craft precise and effective adversarial examples.

Black-box attacks: Attackers have limited or no knowledge of the model but can still exploit its vulnerabilities by probing it with inputs and observing its responses.

Transfer attacks: Adversarial examples generated for one model can often fool other models, even those with different architectures, particularly when the models are trained on similar data for similar tasks.
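To make the black-box setting concrete, here is a minimal sketch of one query-based strategy, finite-difference (zeroth-order) gradient estimation, assuming the attacker can read the model's output probability. The hidden logistic-regression model and the names `predict_proba`, `estimate_gradient` and `black_box_attack` are hypothetical stand-ins; the attack code only ever calls `predict_proba`, never the weights.

```python
import math

# Hypothetical hidden model. The weights exist only so the sketch runs;
# the attack code below never reads them and only calls predict_proba().
_HIDDEN_W = [2.0, -1.0]
_HIDDEN_B = 0.5


def predict_proba(x):
    """Black-box query: return the model's probability for class 1."""
    z = sum(w * xi for w, xi in zip(_HIDDEN_W, x)) + _HIDDEN_B
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return +1, -1 or 0 for a scalar."""
    return (v > 0) - (v < 0)


def estimate_gradient(f, x, h=1e-4):
    """Central finite-difference gradient estimate using 2 * len(x) queries to f."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2.0 * h))
    return grad


def black_box_attack(f, x, eps=0.3, step=0.1, iters=10):
    """Lower the class-1 score with signed steps along the estimated gradient,
    keeping every feature within eps of the original input."""
    x_adv = list(x)
    for _ in range(iters):
        g = estimate_gradient(f, x_adv)
        for i, gi in enumerate(g):
            x_adv[i] -= step * sign(gi)                            # move to reduce f
            x_adv[i] = min(max(x_adv[i], x[i] - eps), x[i] + eps)  # stay in the eps-box
    return x_adv


x = [0.2, 0.3]
x_adv = black_box_attack(predict_proba, x)
print(predict_proba(x), predict_proba(x_adv))
```

Each gradient estimate costs two queries per input feature, which is one reason practical black-box attacks on high-dimensional inputs such as images often use cheaper, randomized estimators or transfer examples from a surrogate model instead.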

Common Adversarial Attack Techniques

Various techniques are employed to generate adversarial examples, including:

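Fast Gradient Sign Method (FGSM): FGSM perturbs the input in a single step, shifting each input feature by a small amount ε in the direction of the sign of the gradient of the loss with respect to the input: x_adv = x + ε · sign(∇ₓL(x, y)). This cheap, one-step change is often enough to make the model predict incorrectly.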

Projected Gradient Descent (PGD): PGD applies FGSM-style steps repeatedly. After each small step, the perturbed input is projected back into a bounded region (typically an ε-ball around the original input), so the total perturbation stays small. This iterative search generally produces stronger attacks than single-step FGSM.

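Adversarial Patch: A small, localized patch, usually clearly visible rather than imperceptible, is optimized so that placing it in an image causes the model to misclassify the input. Because the patch does not need to be hidden, it can be printed and placed in the physical world.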

Transferability: Attackers can create adversarial examples on one model and transfer them to another, exploiting the shared weaknesses among models.
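The two gradient-based techniques above, FGSM and PGD, can be sketched on a toy white-box model. This is a minimal illustration, assuming a two-feature logistic-regression classifier with known weights (`W`, `B`), inputs scaled to [0, 1], and the binary cross-entropy loss, whose gradient with respect to the input is (p - y) * W; all names and values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return +1, -1 or 0 for a scalar."""
    return (v > 0) - (v < 0)


# Hypothetical white-box model: logistic regression with known weights.
W = [2.0, -1.0]
B = 0.5


def predict_proba(x):
    return sigmoid(sum(w * xi for w, xi in zip(W, x)) + B)


def input_gradient(x, y):
    """Gradient of the binary cross-entropy loss w.r.t. the input x: (p - y) * W."""
    p = predict_proba(x)
    return [(p - y) * w for w in W]


def clip01(v):
    # Assumption: features are scaled to [0, 1], like normalized pixels.
    return min(max(v, 0.0), 1.0)


def fgsm(x, y, eps):
    """Single step: x_adv = x + eps * sign(grad_x L), kept in the valid range."""
    g = input_gradient(x, y)
    return [clip01(xi + eps * sign(gi)) for xi, gi in zip(x, g)]


def pgd(x, y, eps, alpha, steps):
    """Repeated signed-gradient steps of size alpha, each followed by a
    projection back onto the L-infinity ball of radius eps around x."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection: clip to [x - eps, x + eps], then to the valid input range.
        x_adv = [clip01(min(max(xa, xi - eps), xi + eps)) for xa, xi in zip(x_adv, x)]
    return x_adv


x, y = [0.2, 0.3], 1  # correctly classified: predict_proba(x) > 0.5
x_fgsm = fgsm(x, y, eps=0.3)
x_pgd = pgd(x, y, eps=0.3, alpha=0.1, steps=10)
print(predict_proba(x), predict_proba(x_fgsm), predict_proba(x_pgd))
```

For a linear model like this one, the gradient direction never changes, so with enough steps PGD ends at the same corner of the ε-box that FGSM reaches in one step. The iterative projection matters for deep networks, where the gradient direction changes as the input moves.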

Defending Against Adversarial Attacks

As the cat-and-mouse game between attackers and defenders continues, several strategies have emerged to mitigate the impact of adversarial attacks:

Adversarial Training: This technique augments the training dataset with adversarial examples, often generated on the fly against the current model. Exposing the model to adversarial inputs during training makes it more robust to similar attacks at inference time.

Input Pre-processing: Methods like input scaling, randomization, and noise injection can make it harder for attackers to craft effective adversarial examples.

Robust Architectures: Designing neural network architectures with built-in defence mechanisms, such as feature denoising layers, can make models more resilient to adversarial attacks.

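Gradient Masking: Techniques such as gradient obfuscation hide or distort the model's gradients, making it harder for attackers to compute them and craft effective adversarial examples. These defences can give a false sense of security, however, because adaptive attacks that approximate or work around the masked gradients often still succeed.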

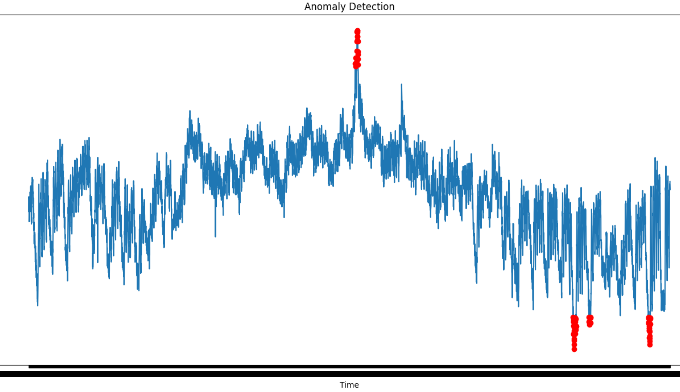

Adversarial Detection: Detection systems monitor inputs, internal activations, or model outputs for signs of adversarial manipulation, then flag or reject suspicious inputs and trigger alerts when an attack is detected.

Certified Defences: These methods provide mathematical guarantees on the model’s robustness by certifying that no adversarial examples exist within a certain radius around the input.
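The first and last strategies in the list above, adversarial training and certified defences, can be sketched together for a linear model, where both are easy to state exactly. This minimal sketch assumes a two-feature logistic-regression classifier trained with plain SGD on a tiny hypothetical dataset (`DATA`) and ℓ∞ perturbations of size `eps`; `train`, `fgsm` and `certified_linf_radius` are illustrative names, not a library API. Adversarial training here replaces every training example with its FGSM perturbation against the current weights, and the certificate relies on the fact that for f(x) = w·x + b, an ℓ∞ perturbation of size r can change f by at most r·‖w‖₁.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    return (v > 0) - (v < 0)


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression; for a linear model this is exactly the
    worst-case L-infinity perturbation of size eps."""
    p = sigmoid(dot(w, x) + b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.1, epochs=200, lr=0.5, adversarial=True):
    """SGD on the logistic loss; with adversarial=True each example is replaced
    by its FGSM perturbation against the current weights before the update."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_t = fgsm(w, b, x, y, eps) if adversarial else x
            p = sigmoid(dot(w, x_t) + b)
            # d(loss)/dw = (p - y) * x_t and d(loss)/db = p - y
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x_t)]
            b -= lr * (p - y)
    return w, b


def certified_linf_radius(w, b, x):
    """Any delta with ||delta||_inf <= r changes w.x + b by at most r * ||w||_1,
    so the predicted class cannot flip while r < |w.x + b| / ||w||_1."""
    return abs(dot(w, x) + b) / sum(abs(wi) for wi in w)


# Hypothetical toy dataset: two well-separated classes in [0, 1]^2.
DATA = [([0.0, 0.0], 0), ([0.1, 0.2], 0), ([1.0, 1.0], 1), ([0.9, 0.8], 1)]
w, b = train(DATA, eps=0.1)
for x, y in DATA:
    print(x, y, certified_linf_radius(w, b, x))
```

This closed-form certificate holds only for linear models; certifying deep networks requires more involved techniques such as randomized smoothing or interval bound propagation, which typically give probabilistic or looser guarantees.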

Challenges and Future Directions

Despite progress in adversarial defence, challenges remain. Adversarial attacks continue to evolve, and the search for universally robust defences is ongoing. In addition, many defence mechanisms introduce trade-offs, such as lower accuracy on clean inputs or higher computational cost, so practitioners must strike a balance between accuracy and security.

In the future, research may focus on interdisciplinary approaches, combining techniques from computer vision, cryptography, and machine learning to develop more robust AI systems. Collaboration between academia and industry will be essential to address these challenges and ensure the responsible deployment of AI technology.

Conclusion

Adversarial attacks pose a significant threat to the reliability and security of deep learning models. Understanding the various attack techniques and employing effective defence mechanisms are crucial to mitigating this risk.

As AI technology becomes increasingly integrated into our daily lives, the battle against adversarial attacks remains at the heart of AI research, highlighting the need for ongoing vigilance and innovation in this ever-evolving field.
