In the rapidly evolving landscape of artificial intelligence and machine learning, transfer learning has emerged as a powerful technique that lets a model reuse knowledge gained in one domain or task when learning another. This approach has changed how machine learning models are developed and trained, often improving their accuracy while reducing the data and computation they need. From image recognition to natural language processing, transfer learning has proven effective across many domains, paving the way for new advances and applications.

Understanding Transfer Learning: Unleashing the Potential

Transfer learning involves training a machine learning model on one task and then using the knowledge it gained to improve its performance on a different but related task. Traditional machine learning approaches train a separate model from scratch for each task, which often requires large amounts of labeled data and computational resources to achieve satisfactory results. In real-world scenarios, however, collecting that much data can be impractical or time-consuming.

Transfer learning addresses this challenge by building on the idea that knowledge gained from solving one problem can be valuable when tackling related problems. Although domains may differ, they often share underlying patterns, features, and representations. By transferring that knowledge, a model gets a head start on the new task, reducing the amount of data and computation it needs.
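
To make the "head start" concrete, here is a minimal sketch of one common form of transfer learning, often called feature extraction or linear probing: a pre-trained feature extractor is kept frozen and only a small new classifier is trained on the target task. Everything here is a toy assumption: `PRETRAINED_W` is a made-up stand-in for weights learned on a source task, the four-example dataset is invented, and a real feature extractor would be a deep network rather than a single linear layer.

```python
import math

# Hypothetical "pre-trained" weights for a tiny feature extractor. In a real
# system these would come from a network trained on a large source dataset;
# the values here are made up purely for illustration.
PRETRAINED_W = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
]


def extract_features(x):
    """Frozen feature extractor: a linear map followed by ReLU, never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [(extract_features(x), y) for x, y in data]  # computed once, reused
    w = [0.0] * len(PRETRAINED_W)
    b = 0.0
    for _ in range(epochs):
        for f, y in feats:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    f = extract_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b >= 0.0 else 0


# A tiny labeled dataset for the new (target) task.
target_data = [([0.0, 0.0], 0), ([0.2, 0.1], 0), ([1.0, 1.0], 1), ([0.9, 1.2], 1)]
w, b = train_head(target_data)
print([predict(w, b, x) for x, _ in target_data])
```

Because only the head's few parameters are trained, a handful of labeled examples can be enough in this toy case; the trade-off is that frozen features cannot adapt if the target task needs different ones.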

Applications Across Domains

1. Computer Vision:

Transfer learning is widely used in computer vision. Models pre-trained on massive datasets such as ImageNet learn to recognize general features like edges, textures, and shapes. These models can then be fine-tuned on smaller, domain-specific datasets for tasks such as object detection or image classification. This approach speeds up training and often yields higher accuracy than training from scratch, particularly when the target dataset is small.
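
Fine-tuning often treats layers differently: the early, generic layers are frozen or updated slowly, while later layers and the new task head are updated more. The sketch below assigns per-layer learning rates using layer-wise learning-rate decay, a common fine-tuning heuristic rather than a fixed rule; the layer names and the `base_lr`, `decay`, and `num_frozen` values are hypothetical.

```python
def layerwise_learning_rates(layer_names, base_lr, decay=0.5, num_frozen=0):
    """Map each layer name (ordered input -> output) to a learning rate.

    The last layer, typically the newly added task head, gets `base_lr`.
    Each earlier layer gets `decay` times the rate of the layer above it, so
    the generic early layers change the least. The first `num_frozen` layers
    get a rate of 0.0, which is equivalent to freezing them.
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    n = len(layer_names)
    rates = {}
    for i, name in enumerate(layer_names):
        if i < num_frozen:
            rates[name] = 0.0
        else:
            depth_from_top = n - 1 - i  # 0 for the last layer
            rates[name] = base_lr * decay ** depth_from_top
    return rates


# Hypothetical layer names for a small pre-trained CNN plus a new classifier head.
layers = ["conv1", "block1", "block2", "block3", "new_head"]
for name, lr in layerwise_learning_rates(layers, base_lr=1e-3, num_frozen=2).items():
    print(f"{name}: {lr:g}")
```

In a framework such as PyTorch, rates like these would typically be passed to the optimizer as separate parameter groups.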

2. Natural Language Processing (NLP):

In NLP, transfer learning has revolutionized the development of language models. Models such as BERT and the GPT family (including GPT-3) are pre-trained on vast text corpora, which lets them learn contextual relationships between words. Fine-tuning these models on smaller, task-specific datasets allows them to excel at tasks such as sentiment analysis, text generation, and question answering.

3. Healthcare:

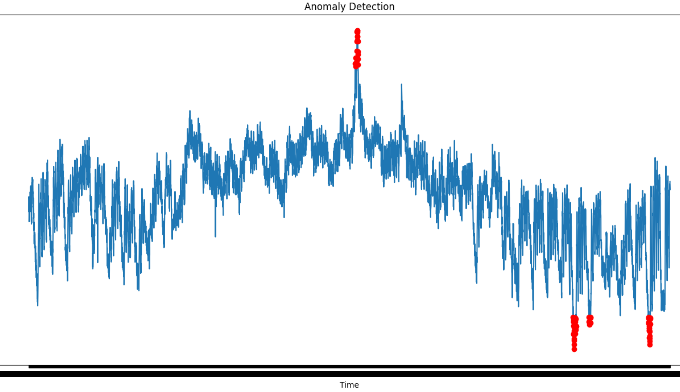

Transfer learning also shows promise in healthcare. Models trained on medical images from one modality (e.g., X-rays) can be adapted to analyze images from another modality (e.g., MRIs) using relatively little labeled data. This approach can support faster diagnosis and help medical professionals identify anomalies.

4. Autonomous Vehicles:

Transfer learning can also play a pivotal role in autonomous vehicles. Models trained to recognize objects, pedestrians, and road signs in one geographic location can be fine-tuned for new environments, potentially accelerating the deployment of autonomous vehicles in diverse settings.

Overcoming Challenges

While transfer learning holds immense promise, it is not without its challenges:

1. Domain Discrepancy: The source and target domains may differ significantly, a situation known as “domain shift.” In such cases, the transferred knowledge may be less effective, and additional techniques such as domain adaptation may be necessary.

2. Task Specificity: Transfer learning works best when the source and target tasks are related; applying it to very different tasks may yield little benefit or even hurt performance, a problem known as “negative transfer.” Understanding how the tasks are similar and how they differ is crucial for successful transfer.

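3. Overfitting: Fine-tuning a large pre-trained model on a small target dataset can cause it to overfit, and overly aggressive updates can also erase the general features it learned during pre-training. Careful fine-tuning (for example, lower learning rates or freezing some layers) and regularization are necessary to strike the right balance.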

4. Ethical Considerations: Transfer learning also raises ethical concerns related to privacy, biases present in the pre-trained data, and the fair distribution of benefits across domains and communities.
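
One regularizer designed specifically for fine-tuning is L2-SP ("starting point"), which penalizes how far the weights drift from their pre-trained values rather than from zero, so the model can adapt to the target data without discarding what it learned. Below is a minimal pure-Python sketch of the penalty and one gradient step; the weight vectors, `alpha`, and `lr` are made-up values, and a real implementation would apply this inside a framework's training loop.

```python
def l2_sp_penalty(weights, pretrained, alpha):
    """L2-SP penalty: (alpha / 2) * ||w - w0||^2, measured from the pre-trained w0."""
    return 0.5 * alpha * sum((w - w0) ** 2 for w, w0 in zip(weights, pretrained))


def l2_sp_grad(weights, pretrained, alpha):
    """Gradient of the penalty with respect to each weight: alpha * (w - w0)."""
    return [alpha * (w - w0) for w, w0 in zip(weights, pretrained)]


def sgd_step(weights, task_grad, pretrained, lr, alpha):
    """One SGD step on the total loss: task loss + L2-SP penalty."""
    reg = l2_sp_grad(weights, pretrained, alpha)
    return [w - lr * (g + r) for w, g, r in zip(weights, task_grad, reg)]


# Hypothetical values: pre-trained weights w0 and weights after some fine-tuning.
w0 = [0.5, -1.0]
w = [1.5, 0.0]
print(l2_sp_penalty(w, w0, alpha=0.1))
# With no task gradient, the penalty alone pulls the weights back toward w0.
print(sgd_step(w, task_grad=[0.0, 0.0], pretrained=w0, lr=0.1, alpha=1.0))
```

As L2-SP is usually described, the penalty applies to the transferred layers, while a newly added head gets ordinary weight decay; a larger `alpha` keeps the model closer to its pre-trained starting point.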

Future Frontiers

The future of transfer learning is rich with possibilities. Researchers are exploring ways to improve cross-domain knowledge transfer, design more efficient architectures, and develop methods that need even less labeled data for fine-tuning. Few-shot and zero-shot learning push the boundaries of transfer learning further, enabling models to handle new tasks from only a handful of labeled examples (few-shot) or from none at all (zero-shot).
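
Few-shot classification on top of a pre-trained encoder can be as simple as nearest-centroid matching, the idea behind prototypical networks: average the few labeled embeddings of each class into a prototype, then assign a query to the nearest prototype. The sketch below covers only that classification step and assumes the embeddings were already produced by some pre-trained model; the 2-D vectors and the `cat`/`dog` labels are invented for illustration.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def build_prototypes(support_set):
    """support_set maps each label to a few embedding vectors for that class."""
    return {label: centroid(vectors) for label, vectors in support_set.items()}


def classify(prototypes, query):
    """Return the label whose prototype is nearest (Euclidean) to the query."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], query))


# Hypothetical 2-D embeddings, standing in for the output of a pre-trained encoder.
support_set = {
    "cat": [[0.9, 0.1], [1.0, 0.2]],
    "dog": [[0.1, 0.9], [0.2, 1.0]],
}
prototypes = build_prototypes(support_set)
print(classify(prototypes, [0.8, 0.3]))
print(classify(prototypes, [0.3, 0.7]))
```

Adding a new class only requires a few labeled embeddings and a new prototype, with no retraining of the encoder; how well this works depends entirely on how well the pre-trained embeddings separate the classes.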

As artificial intelligence continues to seep into various aspects of our lives, transfer learning stands as a beacon of efficiency and progress. Its ability to leverage existing knowledge and adapt it to new challenges is not only accelerating the pace of AI development but also making it more accessible and applicable to a wider array of domains.

As we navigate the uncharted territory of AI, transfer learning is likely to remain a cornerstone of innovation.
