In the fast-moving field of machine learning and artificial intelligence, one truth has not changed: the quality of the input data strongly influences how well a predictive model performs. While algorithmic advances, deep learning architectures, and raw computing power attract most of the attention, feature engineering remains a decisive factor in model performance. Feature engineering is the process of creating, transforming, and selecting the input features (also called variables or attributes) that give a machine learning algorithm the information it needs to make predictions. It bridges raw data and the model’s ability to detect and generalize the underlying patterns.

Unveiling the Potential of Raw Data

Raw data collected from sources such as sensors, social media, or business transactions usually needs cleaning and transformation before a machine learning model can use it. This is where feature engineering comes in. By combining domain expertise with creative thinking, data scientists can design features that extract the relevant signal from raw data and increase its predictive power.

For example, in a natural language processing task such as sentiment analysis, raw text can be converted into features such as word frequencies, sentiment-lexicon scores, or part-of-speech tags. In computer vision, images can be converted into features such as color histograms, edge maps produced by edge detectors, or texture descriptors. These engineered features give the model a more informative view of the patterns hidden in the data, which often leads to better performance.
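To make the text and image examples concrete, here is a minimal pure-Python sketch of two such features: a bag-of-words count vector over a fixed vocabulary and a per-channel color histogram. The function names, the toy regular-expression tokenizer, and the representation of an image as a list of (R, G, B) tuples with values from 0 to 255 are illustrative assumptions; real pipelines would typically use a proper tokenizer and an image library.

```python
import re
from collections import Counter


def word_frequency_features(text, vocabulary):
    """Bag-of-words counts: how often each vocabulary word occurs in the text."""
    # Toy tokenizer (an assumption): lowercase, then keep runs of letters/apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def color_histogram(pixels, bins=4):
    """Per-channel histogram of (R, G, B) pixels with values 0-255.

    Returns the R, G and B histograms concatenated into one feature vector
    of length 3 * bins.
    """
    histogram = [0] * (3 * bins)
    for pixel in pixels:
        for channel, value in enumerate(pixel):
            # Map 0..255 onto bin indices 0..bins-1.
            histogram[channel * bins + value * bins // 256] += 1
    return histogram


vocab = ["great", "terrible", "movie"]
print(word_frequency_features("A great movie, truly great!", vocab))
# → [2, 0, 1]

pixels = [(255, 0, 0), (250, 10, 5), (0, 0, 255)]  # two reds, one blue
print(color_histogram(pixels, bins=4))
# → [1, 0, 0, 2, 3, 0, 0, 0, 2, 0, 0, 1]
```

Both functions turn variable-sized raw inputs into fixed-length numeric vectors, which is what most learning algorithms expect.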

The Fusion of Artistry and Methodology

Feature engineering combines intuition with the scientific method. Domain expertise guides which features to create, while rigorous evaluation, for example on held-out validation data, checks that those features actually improve the model. Common transformations include scaling and normalization, which bring features onto comparable ranges so that algorithms sensitive to differences in feature magnitude (such as k-nearest neighbours, support vector machines, and models trained with gradient descent) do not weight a feature more heavily merely because of its units. These transformations can speed up convergence in gradient-based training and prevent features with large numeric ranges from dominating the others.
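Scaling and normalization can be sketched in a few lines of Python. The example below shows two common variants, min-max scaling to [0, 1] and z-score standardization; the helper names and the toy income and age columns are hypothetical.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly onto the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: avoid division by zero
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Z-score: subtract the mean, divide by the population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:  # constant feature
        return [0.0] * len(values)
    return [(v - mean) / std for v in values]


# Hypothetical columns on very different scales.
income = [30_000, 45_000, 60_000, 120_000]
age = [25, 35, 45, 55]

print(min_max_scale(income))  # both columns now span [0, 1]
print(min_max_scale(age))
print(standardize(income))    # mean 0, standard deviation 1
```

Min-max scaling is sensitive to outliers, because a single extreme value sets the range; z-scores are somewhat less affected, although scalers based on the median and interquartile range are preferable when outliers are severe. In either case, the scaling parameters should be computed on the training data only and then reused on new data.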

Feature engineering also helps with problems such as multicollinearity, where two or more features are highly correlated. In linear models this can make coefficient estimates unstable and hard to interpret. By replacing the correlated features with a new feature that captures their shared information, data scientists can reduce this problem and build a more robust model.
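One simple way to build a feature that captures correlated information is to measure the correlation between two features and, when it is high, replace both with the average of their standardized values. The sketch below assumes two hypothetical housing columns (floor area and room count) and an arbitrary threshold of 0.9; taking a ratio of the two features or using principal component analysis are common alternatives.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length, non-constant lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def zscores(x):
    """Standardize a list to mean 0 and (population) standard deviation 1."""
    n = len(x)
    m = sum(x) / n
    s = math.sqrt(sum((a - m) ** 2 for a in x) / n)
    return [(a - m) / s for a in x]


def combine_if_correlated(x, y, threshold=0.9):
    """Return one composite feature if |corr(x, y)| >= threshold, else None.

    The composite is the average of the two z-scored features, with y's sign
    flipped when the correlation is negative so the two do not cancel out.
    """
    r = pearson(x, y)
    if abs(r) < threshold:
        return None
    sign = 1 if r > 0 else -1
    return [(a + sign * b) / 2 for a, b in zip(zscores(x), zscores(y))]


area_m2 = [50, 70, 90, 120, 150]  # hypothetical floor areas
rooms = [2, 3, 4, 5, 6]           # hypothetical room counts

print(round(pearson(area_m2, rooms), 3))
# → 0.994
size = combine_if_correlated(area_m2, rooms)  # one "size" feature replaces both
print(size)
```

The model then sees a single "size" signal instead of two nearly interchangeable ones, which removes the ambiguity about how to split weight between them.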

Discerning Excellence: Feature Selection

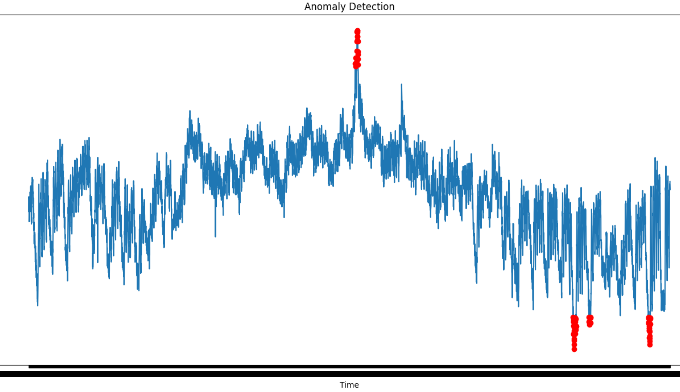

Not all features are created equal. Some are redundant, irrelevant, or noisy and contribute little to the model’s predictive power. Feature selection identifies and keeps only the most informative features and discards the rest. This simplifies the model and reduces the risk of overfitting, the phenomenon in which a model performs well on training data but poorly on new, unseen data.

Feature selection techniques range from simple methods such as correlation analysis and recursive feature elimination to more involved approaches such as Lasso (L1) regularization, which can shrink the coefficients of uninformative features exactly to zero, and feature-importance scores from tree-based models. These techniques help produce compact models that capture the essential patterns without unnecessary complexity.
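As an illustration of the simplest end of this range, here is a sketch of a greedy correlation-based filter: it ranks features by their absolute Pearson correlation with the target, drops weak ones, and skips any feature that nearly duplicates one already kept. The thresholds, helper names, and toy data are assumptions chosen for illustration, and Pearson correlation only detects linear relationships.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 for a constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant feature carries no linear information
    return cov / (sx * sy)


def select_features(features, target, min_relevance=0.3, max_redundancy=0.9):
    """Greedy correlation filter over a dict of feature name -> values.

    Visits features from most to least correlated with the target, stops at
    the first one below min_relevance, and skips any feature whose |corr|
    with an already selected feature exceeds max_redundancy.
    """
    relevance = {name: abs(pearson(vals, target)) for name, vals in features.items()}
    ranked = sorted(relevance, key=relevance.get, reverse=True)
    selected = []
    for name in ranked:
        if relevance[name] < min_relevance:
            break  # descending order, so every remaining feature is weaker
        if all(abs(pearson(features[name], features[kept])) <= max_redundancy
               for kept in selected):
            selected.append(name)
    return selected


target = [10, 20, 30, 40, 50]
features = {
    "x1": [1, 2, 3, 4, 5],           # strongly related to the target
    "x1_copy": [2, 4, 6, 8, 10],     # rescaled duplicate of x1 (redundant)
    "x2": [1, 3, 2, 5, 4],           # related, but not a duplicate of x1
    "noise": [2, 1, 2, 1, 2],        # unrelated to the target
}
selected = select_features(features, target)
print(selected)
```

Only one of `x1` and `x1_copy` survives, `x2` is kept, and `noise` is dropped. For the wrapper and embedded approaches mentioned above, scikit-learn provides `sklearn.feature_selection.RFE` and `sklearn.linear_model.Lasso`.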

Confronting the Challenge of High-Dimensional Data

With ever-larger datasets, feature engineering becomes even more important because of the curse of dimensionality. As the number of features grows, models need more computation, become more prone to overfitting, and tend to generalize worse, partly because the amount of data needed to cover the feature space grows rapidly with its dimension. Carefully designed features that capture the most relevant information counter these problems by giving a compact representation of the data.
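One symptom of the curse of dimensionality can be shown directly: as the number of dimensions grows, distances between random points concentrate, so the nearest and farthest neighbours of a query point end up almost equally far away. This hurts distance-based methods such as k-nearest neighbours. The sketch below measures the ratio of nearest to farthest distance for points drawn uniformly from a unit hypercube; the function name, sample size, and dimensions tried are arbitrary choices for illustration.

```python
import math
import random


def distance_contrast(n_points, dim, seed=0):
    """Ratio of nearest to farthest Euclidean distance from one random query
    point to n_points random points in the unit hypercube [0, 1]^dim.

    Values close to 1 mean near and far neighbours are hard to tell apart.
    """
    rng = random.Random(seed)  # seeded so the result is reproducible
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return min(dists) / max(dists)


for dim in (2, 10, 100, 1000):
    print(dim, round(distance_contrast(200, dim), 3))
```

The ratio is small in two dimensions and rises toward 1 as the dimension grows, which is why a few informative features often serve distance-based models better than many raw ones.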

Automating Feature Engineering

As machine learning matures, automation is increasingly used to speed up feature engineering. Automated machine learning (AutoML) platforms aim to reduce the manual labour involved in feature engineering and selection. They use search techniques such as genetic algorithms and Bayesian optimization to explore candidate feature transformations and model pipelines, while neural architecture search automates the design of deep networks that learn their own representations from raw data.
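The core idea of automated feature generation, proposing many candidate features and keeping those that score best, can be illustrated with a deliberately naive sketch. Rather than a genetic algorithm or Bayesian optimization, it exhaustively builds pairwise products and ratios of the input features and ranks all candidates by absolute correlation with the target. The helper names and data are hypothetical, and real AutoML tools search far larger spaces more efficiently and validate candidates on held-out data.

```python
import itertools
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 for a constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def generate_candidates(features):
    """Original features plus pairwise products and ratios (name -> values)."""
    candidates = dict(features)
    for (a, xa), (b, xb) in itertools.combinations(features.items(), 2):
        candidates[f"{a}*{b}"] = [u * v for u, v in zip(xa, xb)]
        if all(v != 0 for v in xb):  # skip ratios that would divide by zero
            candidates[f"{a}/{b}"] = [u / v for u, v in zip(xa, xb)]
    return candidates


def auto_features(features, target, top_k=3):
    """Rank every candidate by |correlation with target| and keep the top_k."""
    candidates = generate_candidates(features)
    ranked = sorted(candidates,
                    key=lambda name: abs(pearson(candidates[name], target)),
                    reverse=True)
    return ranked[:top_k]


# Hypothetical listings: price is exactly area times price per square metre.
features = {
    "area": [50, 80, 60, 100, 120],
    "price_per_m2": [3.0, 2.0, 4.0, 2.5, 3.5],
}
price = [150, 160, 240, 250, 420]

print(auto_features(features, price))
# → ['area*price_per_m2', 'area', 'price_per_m2']
```

Because the candidate set grows quadratically with the number of input features, exhaustive generation quickly becomes impractical, and scoring many candidates on the same data raises the chance of picking one that correlates with the target by accident. Both are reasons AutoML platforms rely on guided search and held-out validation instead.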

On the path from raw data to predictive insight, feature engineering is a pivotal step. It turns raw data into meaningful representations, addresses high dimensionality and multicollinearity, and helps models learn from the relevant patterns. With the rise of automated feature engineering and a steadily growing toolkit of techniques, the field keeps evolving, promising better model performance and better decision-making across many domains.

Data science and machine learning practitioners should recognize the central role of feature engineering and keep refining their skill in this blend of art and science.
